BIT-101

Bill Gates touched my MacBook Pro

I’ve had some kind of home server / home lab for many years. Probably going back to 2008-ish. Generally this has been just a desktop computer running some Linux distro or another and connected to the internet. For many years, this was just an extra computer I could connect to, try things out, store files I didn’t need on my laptop (it usually had a decent amount of storage). I also often used it as a desktop computer for doing things if I happened to be sitting at the desk where it was.

I used these desktops for somewhat of a “backup” but it was really just an extra copy of files in case my laptop fell off a cliff or something. A few years ago I started doing more structured, scheduled backups with restic. This worked out pretty well, but at some point it failed and I didn’t get around to fixing it for a while.

As for external connectivity, at first I was just opening up a port on my router. Then one day I got a glimpse of the traffic that was trying to access that port and shut it down quick. I dodged a bullet there. Then I set up Wireguard. I had a VPS in the cloud that was the main connection point. That could route traffic/services to the home server, or any other device on the Wireguard network.

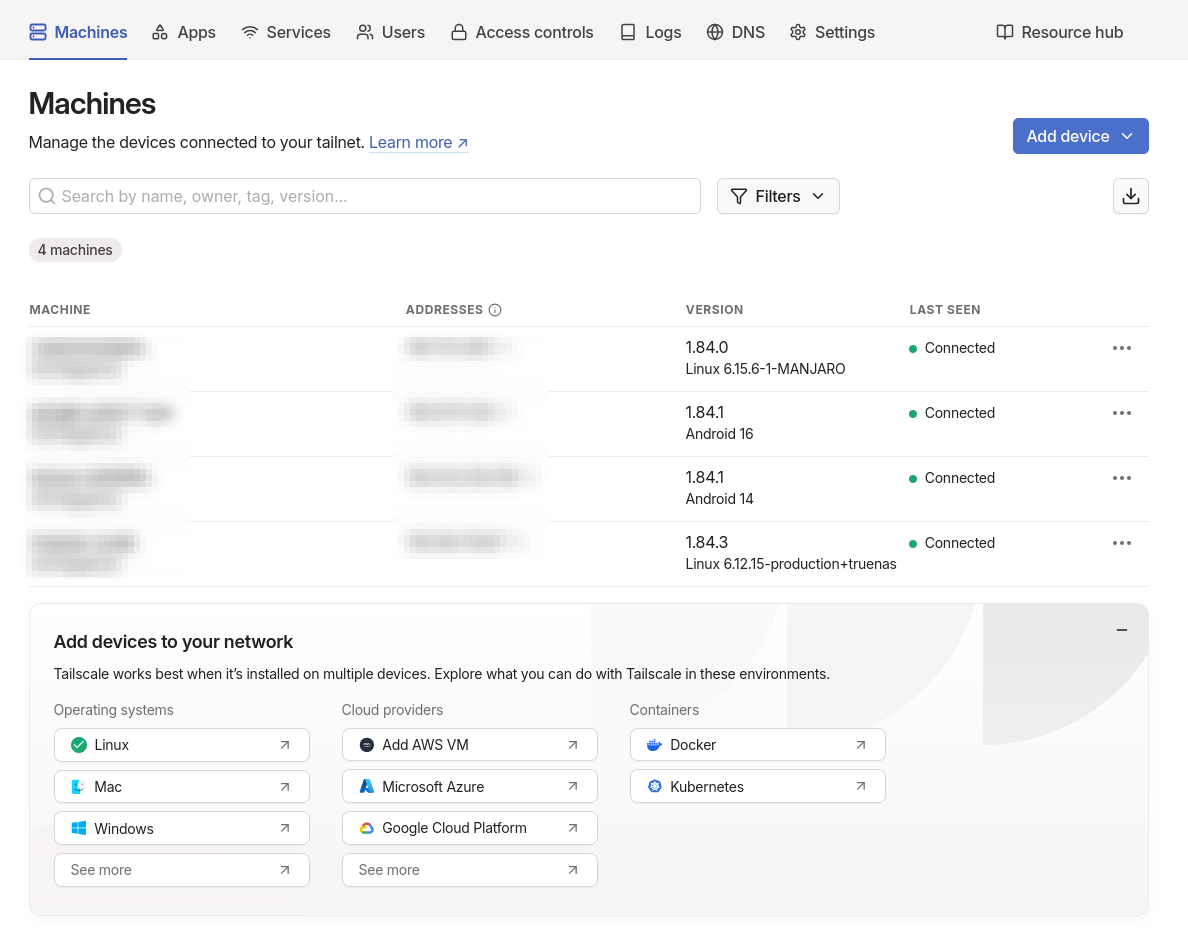

This all worked well for years and I wound up putting up various self-hosted services there. Music servers (Subsonic or compatible) and video servers (Plex, Jellyfin) mainly, but some things I created myself, and a lot of different self-hosted apps that I tested but didn’t end up keeping around very long.

A few years ago I rebuilt my home desktop with a pretty powerful setup, somewhat of a gaming rig. I decided this was overkill to run as an always-on home server. So I got a thin client HP ProDesk device for cheap.

This is a tiny device. A bit bigger than an external DVD drive. It has room for an NVME SSD and a 2.5" drive. I plugged in a couple of external USB drives and I’ve been running that as my home server for over a year. It was running Ubuntu server, so my first headless server. But the USB connections were a bit funky.

This is when I started eyeing NASes.

I wasn’t sure what the NAS experience was. So many questions. Is this something I needed? Was a headless server with storage fine? Did I really need a RAID? What apps could I install on which types of NAS?

Prebuilt NASes are expensive, even before adding drives. Even a 2-bay NAS is not cheap. And not expandable at all. Want to add a drive or a couple more? Nope. Get a new NAS with more bays. I didn’t want to sink many hundreds of dollars into this before I even knew whether it was something I needed.

So I started looking at the DIY route. After reading many articles and watching many videos, I settled on a used HP EliteDesk 800 G3 SFF for under $90 US on Amazon.

The SFF is for “small form factor”, but it’s a lot bigger than the ProDesk. Specs:

It came with Windows 10 installed, but I never even booted into that. I was looking at Unraid, TrueNAS Core and TrueNAS Scale and went for the last one. Installed Scale and it booted up. I had two 4TB external HDDs that I broke open and extracted the 2.5" drives and put them in the HP. It recognized everything and I created a pool with the two drives in a mirrored VDEV, synced some files to it, messed around with the system and got a feel for it.

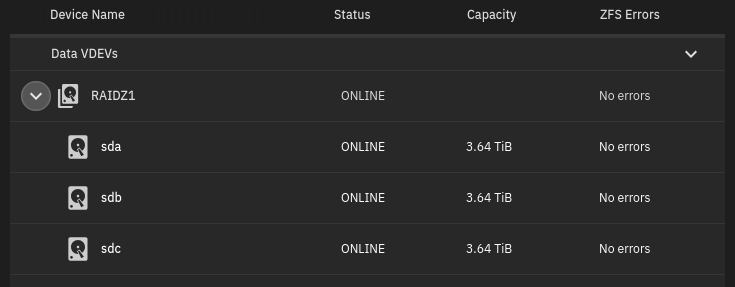

But I wanted to eventually use all three storage slots, and adding a third drive to a mirrored VDEV only adds redundancy, not capacity, so I figured I’d do it right from the start and ordered another 3.5" 4TB drive. When that got in, I set up a RAIDZ1 VDEV. This gave me 8TB (7.14TiB) of storage, with one drive’s worth of space used for parity.
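The capacity arithmetic for that layout can be sketched in a few lines. This is a rough calculation only: the helper name `raidz_usable_bytes` is made up for illustration, and it ignores ZFS metadata and padding overhead, which is presumably why TrueNAS reports a bit less than the raw number.

```python
TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, as reported by TrueNAS


def raidz_usable_bytes(drive_count: int, drive_bytes: int, parity: int = 1) -> int:
    """Raw usable capacity of a RAIDZ vdev, ignoring ZFS overhead.

    Hypothetical helper: parity=1 corresponds to RAIDZ1.
    """
    if drive_count <= parity:
        raise ValueError("need more drives than the parity level")
    return (drive_count - parity) * drive_bytes


# Three 4TB drives in RAIDZ1: two drives' worth of data, one of parity.
usable = raidz_usable_bytes(drive_count=3, drive_bytes=4 * TB)
print(f"{usable / TB:.0f} TB = {usable / TIB:.2f} TiB before overhead")
```

The raw figure comes out slightly above the 7.14TiB shown in the UI, so the difference is overhead rather than a missing drive.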

Most of the files I wanted were on DropBox. I set up a cloud sync to pull all those files down. It was almost 1.4TiB of data, so it took a good 48 hours (DropBox does a lot of limiting). But with all that, I’m only using 18% of my storage.
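For a sense of scale, the average transfer rate implied by those numbers is easy to work out. A quick sketch, using the rounded figures from above (the function name is just for illustration):

```python
TIB = 2**40
MIB = 2**20


def average_rate_mib_s(total_bytes: float, hours: float) -> float:
    """Average throughput in MiB/s for a transfer of total_bytes over the given hours."""
    return total_bytes / MIB / (hours * 3600)


# Roughly 1.4TiB pulled down in about 48 hours.
rate = average_rate_mib_s(1.4 * TIB, 48)
print(f"{rate:.1f} MiB/s")
```

That is an average over the whole run, so the actual speed at any moment was likely bouncing around quite a bit.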

As the data was syncing, I was running into occasional read/write errors on one of my drives. It was one of the 2.5" drives, which was a couple of years old, yanked out of that external HDD case. Not really shocking. This probably explained some of the errors I’d been seeing on my previous setup. I ran some S.M.A.R.T. tests on the drives and that same drive was consistently failing. But… the errors were not happening very often. And hey… parity disk. I’m already feeling the comfort of having a NAS with a RAID. So I let the download finish and planned on replacing the drive afterwards.

The main functionality I wanted was:

I know what you’re thinking… “But that drive has errors! You need to fix it!” Yes, I ordered a drive, and started setting up my apps while I waited for that to arrive.

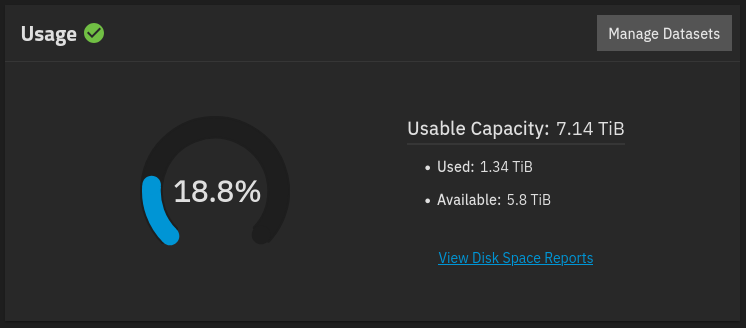

For music and video I just went with Jellyfin. I switched over to Jellyfin from Plex earlier this year, when Plex more than doubled its prices while simultaneously reducing its features. I’ve been really impressed, especially with its music service. I’d been using PlexAmp for music, which is a great app on both desktop and mobile devices. Jellyfin is not quite as full featured, but does everything I needed.

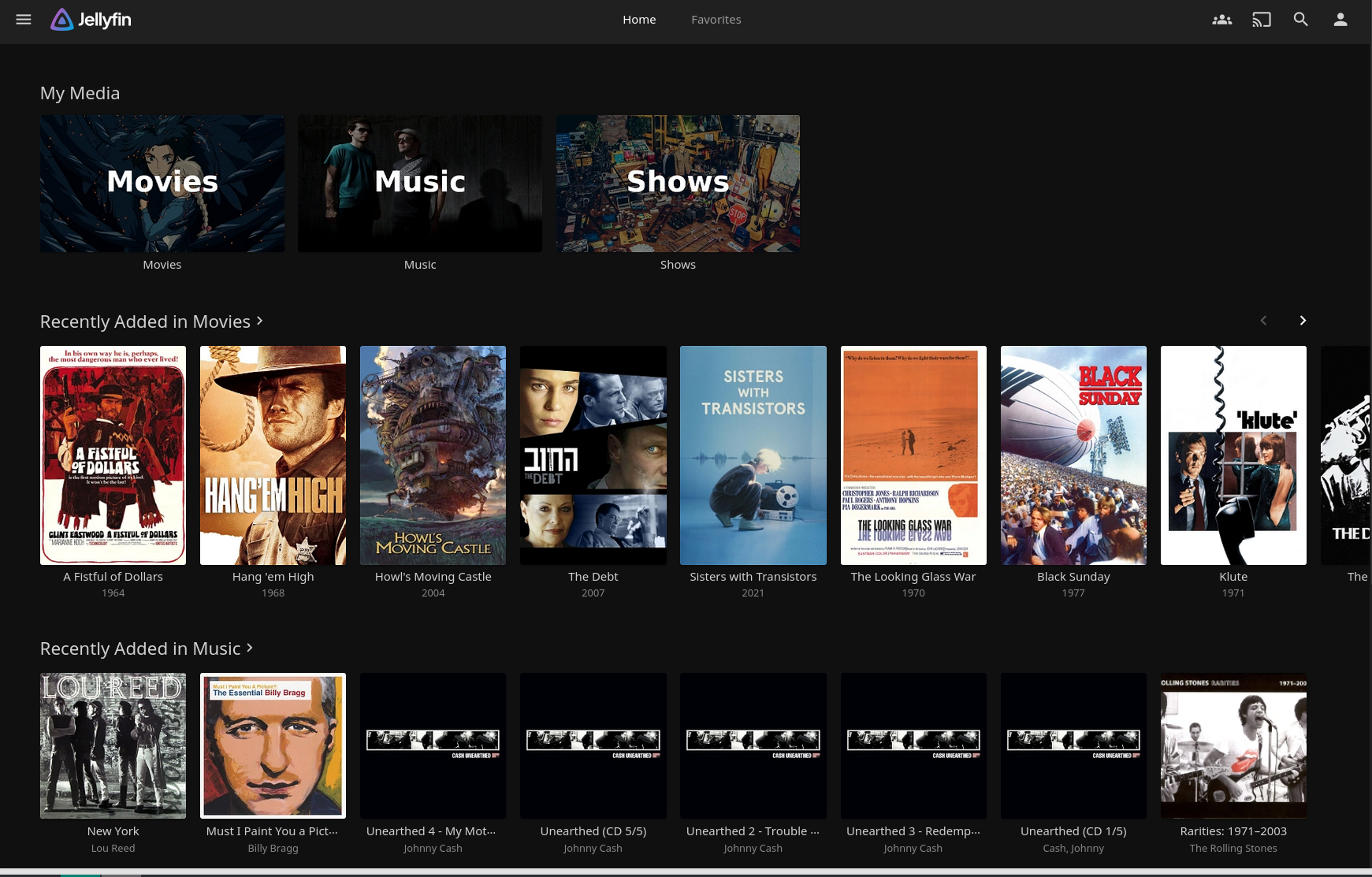

For external connectivity I switched over to Tailscale. I’d been wanting to do that for a while, but procrastinated for months just because Wireguard was working and I didn’t want to mess with something new. It turns out that Tailscale was super easy to set up - much easier than Wireguard. And it’s so much better. My Wireguard setup was a server/client configuration, which was why I had the cloud VPS as the server. But Tailscale is a peer-to-peer mesh. Any device can access any other device. I think you might be able to do that with Wireguard, but I never figured out how. With Tailscale, that’s just the way it works out of the box. Once this was working I realized I had no need at all for the VPS anymore. All my devices could talk to the NAS directly. Come to think of it, I probably could have just set up my home server as the Wireguard server and done the same thing. I think.

My Tailscale devices - Linux laptop, phone, tablet, NAS. Still need to set up my Macbook Air.

There are a few devices I need to sync files to.

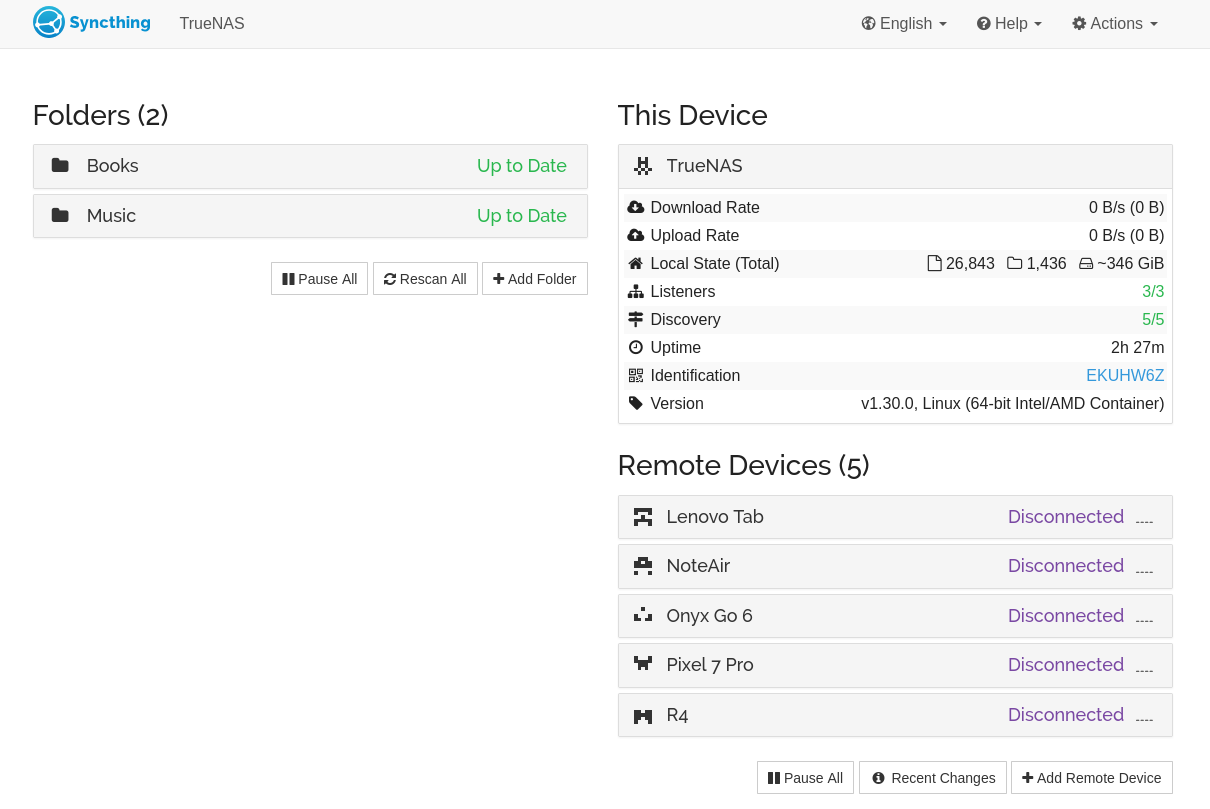

For this I used SyncThing. It syncs the books to the readers and tablets and the music files to the DAP. It was a bit fiddly to set this up, but I got there. I used to use an app called DropSync for this - an Android app that is kind of like rsync for DropBox. It works well, but I’m no longer relying on DropBox, so needed to change this.

One consideration for this was that SyncThing wants to constantly monitor changes and sync constantly. Since all these devices are battery powered, I don’t want this happening 24-7. Also, it’s not like I’m adding books and music at all hours of every day. Maybe 1-2x a week, max. I set it up so that they’ll only connect if they are on AC power. This is great. If I add a book or album to the NAS, I just plug in the device and it will connect and sync.

Here you can see that all my devices are disconnected. By design! I plug one in to AC power, and it will connect.

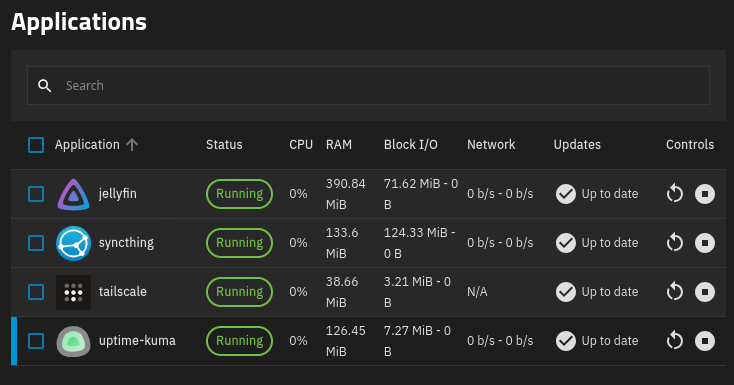

And here are all my apps in TrueNAS as of this writing.

I’ll talk about Uptime Kuma a bit later.

OK, OK, the new drive arrived. Nothing crashed in the meantime. Time to replace it. btw, I ordered some other hardware at the same time. HP does some funky mounting and cable routing in their SFF devices. It’s cramped and you need special screws for the drives to slot in correctly. Up to now, they’ve just been kind of balanced in there. I got the screws and some 90-degree connectors, which made everything a lot better.

Following multiple forms of documentation, I first took the problem drive offline. You just do that in the TrueNAS UI. Then I went in and pulled the drive and plugged in the new one - while the system was running! WTF. I didn’t know this was possible. I know dedicated NASes do this, but figured it was some special magic hardware sauce. No, you can just hotswap a SATA drive - assuming it’s offline or unmounted I guess.

As soon as the drive was offline, my pool warned me that it was in a degraded state. This means that everything will still function, but you should be careful about doing a lot of stuff with files. With one drive out of a RAIDZ1 array there is no redundancy left, and if something else goes wrong, that’s that.

Next, I chose the offline drive and asked to replace it. I selected the new drive as the replacement and that took about a minute. But things were still degraded because this new drive was blank. It had to be “resilvered”. I didn’t really know what that meant, but I have somewhat of an idea now. With the RAIDZ1 array, two drives’ worth of data is spread across three drives, along with one drive’s worth of parity information. If you lose one whole drive, it can use what it has left, plus the parity, to reconstruct any missing data. It does that to rebuild the new drive. It’s not just copying data, it’s calculating what needs to be there based on the other two drives. So this takes a LONG time. For my 1.34TiB of data, it took over 12 hours. But when it was done, it was back to full functionality.
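The reconstruction idea behind single-parity RAID can be shown with plain XOR. This is a toy sketch, not how ZFS lays data out on disk (RAIDZ uses variable-width stripes and spreads parity across all drives), and the block contents and names are made up for illustration.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """XOR equal-length byte blocks together, position by position."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must all be the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: two data blocks plus one parity block, each on a different drive.
data_a = b"hello wo"
data_b = b"rld!!!!!"
parity = xor_blocks(data_a, data_b)

# The drive holding data_b dies. Rebuild it from the two survivors.
rebuilt_b = xor_blocks(data_a, parity)
print(rebuilt_b == data_b)
```

Every block on the replacement drive has to be recomputed this way from the other two drives, which goes some way to explaining why a resilver takes hours instead of minutes.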

Although this was a bit of a pain in the neck to do right in the first week of setting up the NAS, I’m pretty happy it happened when it did. I learned a lot and when that other 2.5" drive goes (which I’m sure it will before long), I’ll know just what’s coming.

I’ve been kind of using DropBox as an offsite backup. But I’m kind of done with it. It’s a constant upsell and pushing all kinds of other new features on you all the time. After looking at a bunch of other cloud storage providers, I’m switching over to pCloud. It’s a bit cheaper than DropBox and it’s very focused on just data storage. Lots of good reviews.

I’ve backed up my NAS there with just a straight sync. This is not really ideal because if I accidentally delete some stuff, it’s going to be deleted in pCloud too. I have 30 days to recover deleted files there, but it’s not the best in the long run. Better than DropBox though.

What I’d like to get going is an actual versioned, incremental backup. I think I might be able to use restic to do that on pCloud. Or maybe there’s a way to do it with rclone, which is what TrueNAS uses by default there. That’s the next thing I want to look into. And probably doing a local restic backup on an external drive as well. Future stuff. At least it’s somewhere else for now.
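As a starting point for that investigation, here is a sketch of what a restic snapshot-plus-retention run might look like. Everything specific here is an assumption: the rclone remote name `pcloud`, the repository path, the dataset path and the retention numbers are all hypothetical, and none of it has been tested against pCloud. The code only builds and prints the commands; it doesn't run them.

```python
import shlex


def restic_backup_cmd(repo: str, paths: list[str]) -> list[str]:
    """Command that takes a new snapshot of the given paths."""
    return ["restic", "-r", repo, "backup", *paths]


def restic_forget_cmd(repo: str, daily: int, weekly: int, monthly: int) -> list[str]:
    """Command that applies a retention policy and prunes unreferenced data."""
    return [
        "restic", "-r", repo, "forget",
        "--keep-daily", str(daily),
        "--keep-weekly", str(weekly),
        "--keep-monthly", str(monthly),
        "--prune",
    ]


# Hypothetical repository reached through an rclone remote called "pcloud".
REPO = "rclone:pcloud:nas-backup"

# restic also needs the repository password, e.g. via RESTIC_PASSWORD_FILE.
for cmd in (
    restic_backup_cmd(REPO, ["/mnt/tank/files"]),  # hypothetical dataset path
    restic_forget_cmd(REPO, daily=7, weekly=4, monthly=6),
):
    print(shlex.join(cmd))
```

Unlike a straight sync, deleting a file locally only affects future snapshots. Older snapshots still hold it until the retention policy expires them.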

Also worth considering is that if my backup size goes over 2TB, I’ll have to figure something else out. The vast majority of that is music and movies. And I’m way more concerned about preserving my music. If I lost all my movies tomorrow, I’d actually be kind of ok with it. I might just move those to a local backup only at some point.

There’s a bunch of stuff to schedule in TrueNAS.

First the cloud sync / backup. I have one for my general files and another for all the app configuration stuff, which is all set up in a different dataset on the NAS. These will both get synced to pCloud daily. Eventually I’ll move to something more robust, as described above.

Then there are S.M.A.R.T. tests for all the disks. I have those scheduled for once a week.

Finally there is a scrub task, which is a ZFS thing that I only partially understand. But I know that it examines all your stored data along with the checksums to see if anything has been corrupted. If it finds anything, it can generally correct it. So that’s nice. The schedule on that is a bit more complex based on what was set up by default. I’m not messing with that until I understand more.
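The verify-and-repair idea can be sketched like this. It is a simplification under stated assumptions: SHA-256 stands in for whatever checksum the pool actually uses, and the "good copy" here is a second copy as in a mirror, whereas on RAIDZ the good data would be rebuilt from parity. The function names are made up.

```python
import hashlib


def checksum(block: bytes) -> str:
    """Stand-in checksum for illustration."""
    return hashlib.sha256(block).hexdigest()


def scrub(copies: list[bytearray], expected: str) -> int:
    """Check every copy against the stored checksum and repair bad ones.

    Returns the number of copies that had to be repaired.
    """
    good = next((bytes(c) for c in copies if checksum(bytes(c)) == expected), None)
    if good is None:
        raise RuntimeError("no intact copy left; data is unrecoverable")
    repaired = 0
    for copy in copies:
        if checksum(bytes(copy)) != expected:
            copy[:] = good  # overwrite the corrupted copy in place
            repaired += 1
    return repaired


original = b"important data"
stored_checksum = checksum(original)
copies = [bytearray(original), bytearray(original)]
copies[1][0] ^= 0xFF  # simulate silent corruption on one drive

repaired = scrub(copies, stored_checksum)
print(f"repaired {repaired} copy")
```

The key point is that the checksum is what detects the damage, and the redundancy is what makes the repair possible.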

I set up the timing on all that so that none of these things should be happening while another major task is running. I think that’s sensible. You don’t want to be syncing data while you’re checking your disks or data. I guess.
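One way to sanity-check a schedule like that is to write the time windows down and look for overlaps. The times and durations below are invented for illustration (they are not the actual schedule, and real durations vary from run to run), and the sketch ignores windows that wrap past the end of the week.

```python
from itertools import combinations


def window(day: int, hour: float, duration_h: float) -> tuple[float, float]:
    """Return (start, end) in hours since Monday 00:00. Day 0 is Monday."""
    start = day * 24 + hour
    return start, start + duration_h


def find_conflicts(tasks: dict[str, list[tuple[float, float]]]) -> list[tuple[str, str]]:
    """Return pairs of task names that have at least one overlapping window."""
    conflicts = []
    for (name_a, wins_a), (name_b, wins_b) in combinations(tasks.items(), 2):
        if any(a0 < b1 and b0 < a1 for a0, a1 in wins_a for b0, b1 in wins_b):
            conflicts.append((name_a, name_b))
    return conflicts


# Hypothetical schedule with guessed durations.
tasks = {
    "cloud sync": [window(d, 1, 2) for d in range(7)],  # daily at 01:00, ~2h
    "smart test": [window(5, 4, 3)],                    # Saturday 04:00, ~3h
    "scrub": [window(6, 2, 6)],                         # Sunday 02:00, ~6h
}

print(find_conflicts(tasks))
```

In this made-up schedule the Sunday scrub starts while the daily cloud sync is still inside its window, so that pair gets flagged and one of them would need to move.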

One thing I was proud of in my previous server setups was the monitoring I set up. Every service and app was checked via cronjobs. If it was running and OK, I’d ping a check I set up on https://healthchecks.io/. If it didn’t get a ping in a certain interval it would start emailing me.

There’s already some alerting built in to TrueNAS. If disks go down or have errors, if S.M.A.R.T. tests fail, and a few other things, I set up alerting to notify me by email.

But what if TrueNAS itself goes down? Power or internet goes out? For that I set up HealthChecks again with a very simple cronjob that pings every so often. If it doesn’t ping, I know about it.
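A heartbeat like that can be very small. Here is a minimal sketch in Python. The ping URL is a placeholder (each check has its own), and the `opener` parameter exists only so the function can be exercised without touching the network.

```python
import urllib.request

# Placeholder: substitute the ping URL of your own check.
PING_URL = "https://hc-ping.com/your-check-uuid"


def send_heartbeat(url: str = PING_URL, timeout: float = 10.0,
                   opener=urllib.request.urlopen) -> bool:
    """Ping the check URL. Return True on HTTP 200, False on any network failure."""
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:  # URLError, HTTPError and socket timeouts are all OSErrors
        return False
```

A cron job would call `send_heartbeat()` every few minutes. If the NAS loses power or internet, the pings simply stop, and it's the missing ping that triggers the alert.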

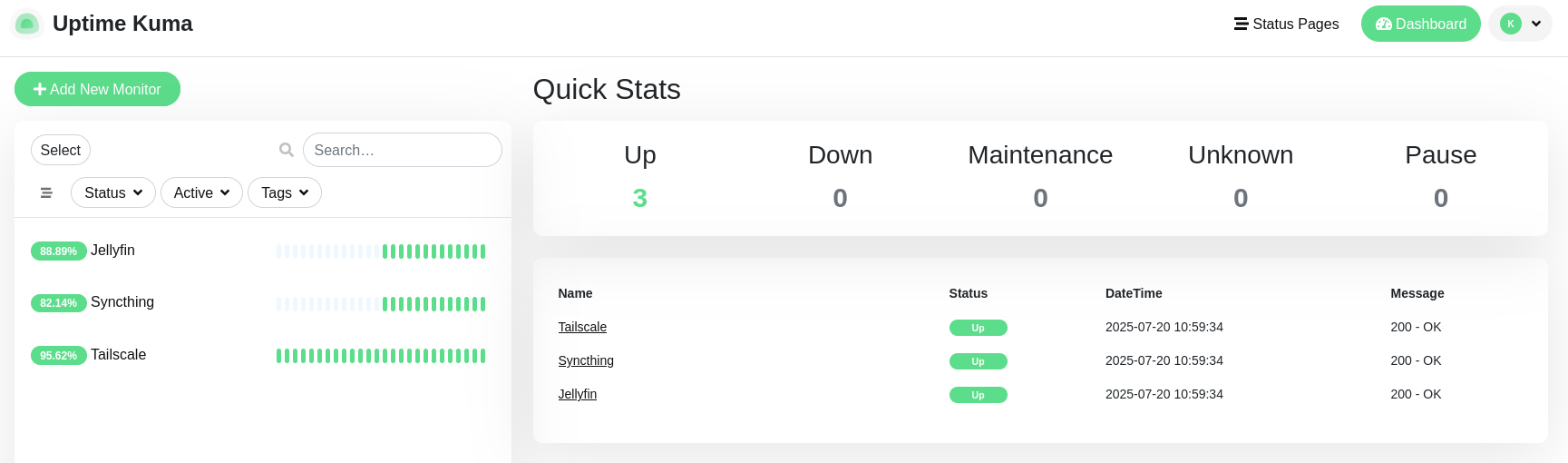

I thought about doing the same for my other apps, but wound up installing Uptime Kuma. This is kind of the opposite idea. HealthChecks is like a “dead man’s switch” - if it doesn’t get a ping, it takes action.
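The logic on the receiving end of that kind of check boils down to one comparison. A sketch, assuming a check is configured with an expected period and a grace time before alerting (the values below are invented):

```python
from datetime import datetime, timedelta


def is_overdue(last_ping: datetime, now: datetime,
               period: timedelta, grace: timedelta) -> bool:
    """True if the silence since the last ping exceeds period plus grace."""
    return now - last_ping > period + grace


last_ping = datetime(2025, 1, 1, 12, 0)
period = timedelta(minutes=5)   # how often a ping is expected
grace = timedelta(minutes=10)   # extra slack before alerting

print(is_overdue(last_ping, datetime(2025, 1, 1, 12, 10), period, grace))  # 10 minutes of silence
print(is_overdue(last_ping, datetime(2025, 1, 1, 12, 20), period, grace))  # 20 minutes of silence
```

The first case is still within period plus grace, so nothing happens. The second one is past it, which is when the emails would start.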

Uptime Kuma, on the other hand, pings each of my services regularly, and if that ping fails, it notifies me by email. Here’s my dashboard:

Just realizing I could go on for a while more, but that’s enough for one post. There will be more, probably, later.

Comments? Best way to shout at me is on Mastodon